Font Selector | Fabrie Write (AI)


AI Assistant: Fabrie Write

Product design: Muki

UX design: Muki

UI design: Mao, Gaoyi

Prompt Engineer: Yiqi, Xiran, Muki

01

Framing

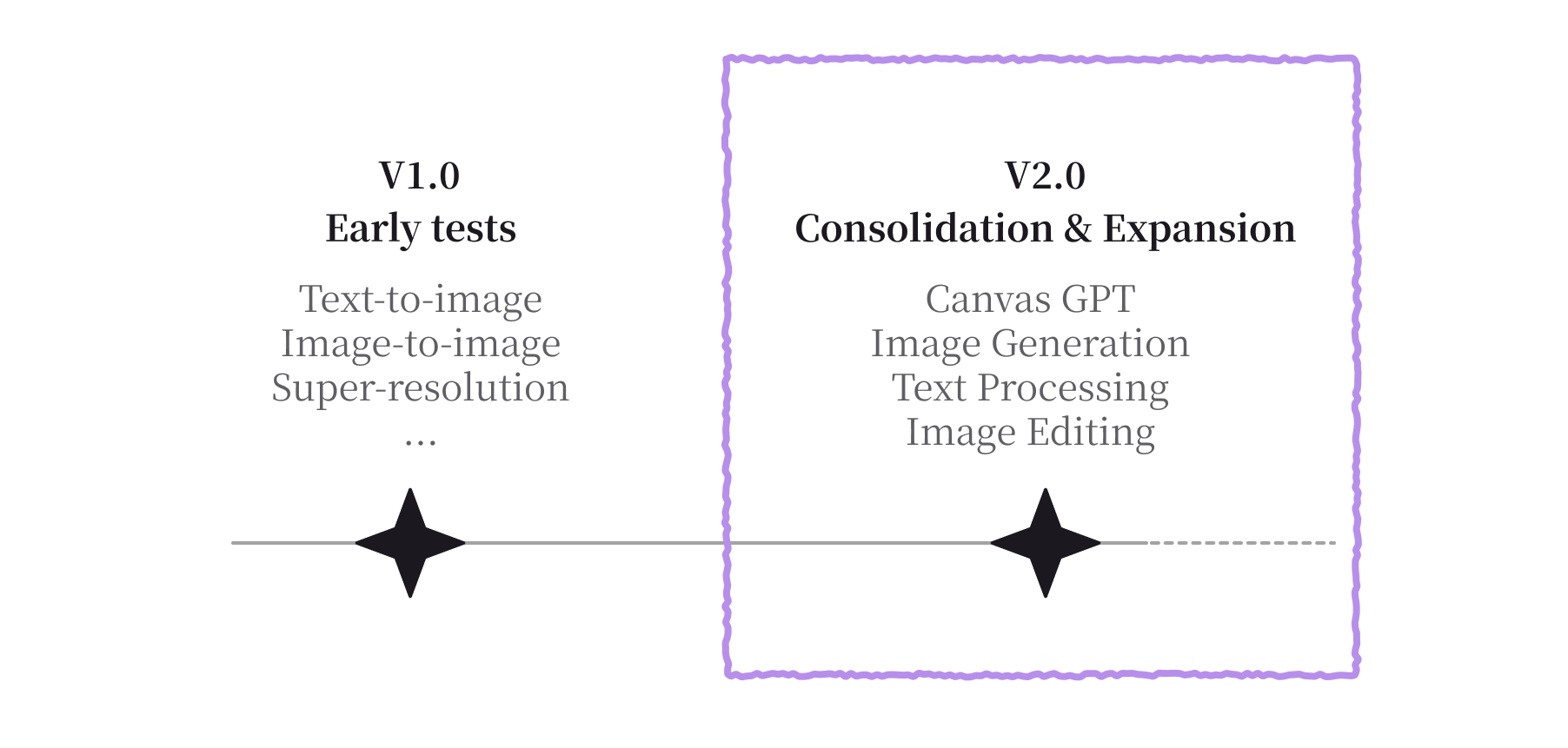

In 2023, a year after launching the Fabrie whiteboard and completing a major technical refactor, the team started experimenting with AI features. Early tests (v1.0) included basic image capabilities such as text-to-image, image-to-image, and super-resolution. These experiments validated user interest, but the features remained lightweight and only loosely connected to the core whiteboard experience.

As Midjourney and ChatGPT gained massive traction, many tools expanded toward “AI + whiteboard” scenarios. For Fabrie, the whiteboard was already a natural canvas for AI-generated content, presenting an opportunity for feature expansion and product differentiation.

ChatGPT - chat-style, timeline-based interactions

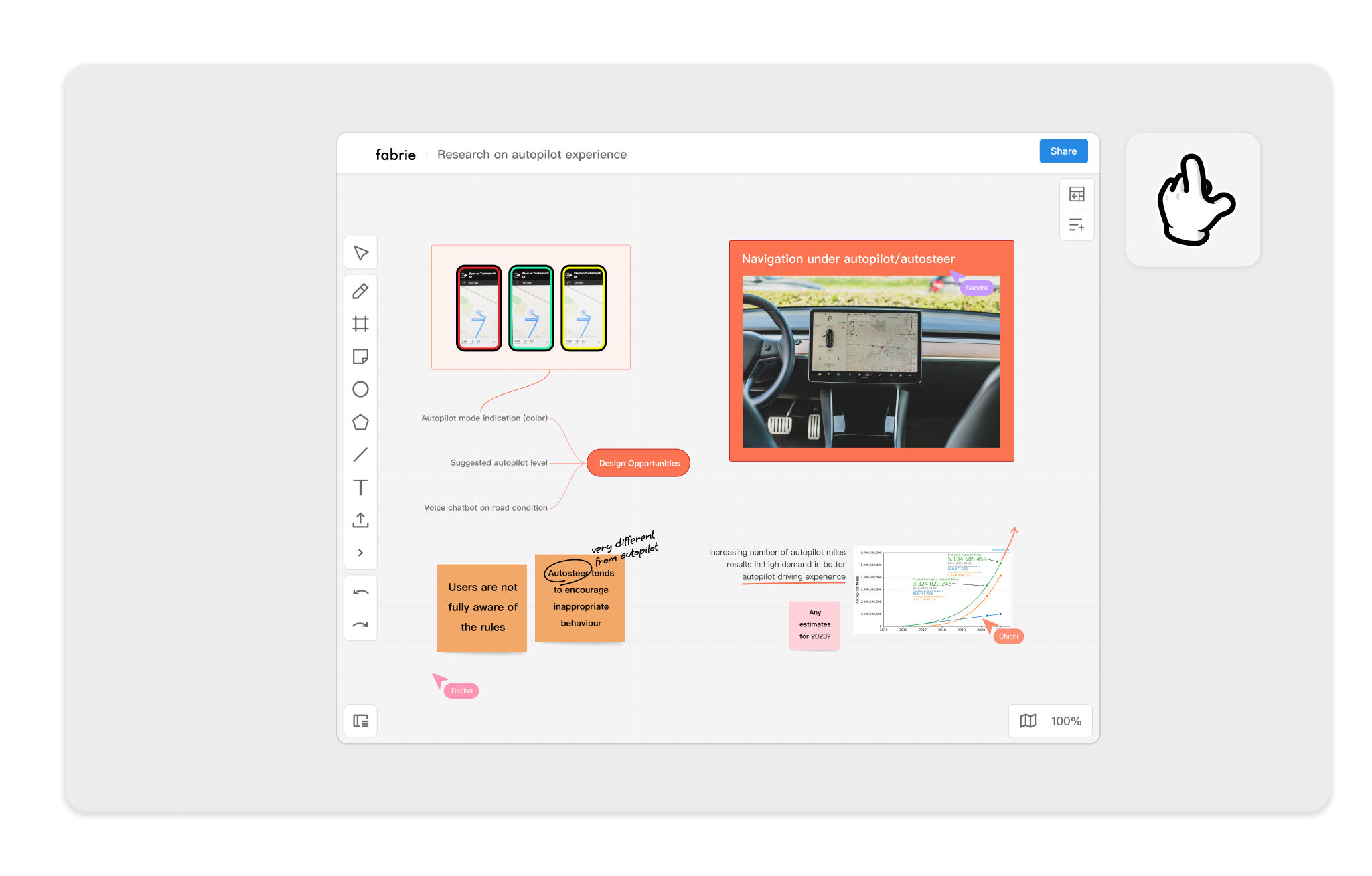

Fabrie - where AI meets whiteboards for spatial collaboration

However, most AI features on the market were limited to chat-style, timeline-based interactions, focusing mainly on text or image generation. Fabrie’s real strength lies in spatial collaboration. Simply embedding a chat window into the whiteboard would neither create differentiation nor bring meaningful value to our users.

Challenge

How might we leverage Fabrie’s spatial strengths to design AI features that truly enhance collaboration and document value—rather than becoming just another replaceable chat plugin?

02

Discovering

I analyzed several AI tools (ChatGPT, Notion AI, Gamma, Tome, Miro, etc.) to understand differences in feature types, interaction patterns, and integration with core products.

Mainstream interactions are concentrated in chat windows or command-based sidebars

Most features focus on content generation, with limited integration into spatial objects

Two Core Capabilities of AI

Based on this research, AI capabilities can be broken down into two steps:

Step 1: Natural Language Processing — understanding user text input

Step 2: Content Generation — producing images, text, low-code, executable code, or video
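To make the two-step breakdown concrete, here is a minimal TypeScript sketch. It is not Fabrie's implementation: `understand`, `generate`, and the keyword rules are hypothetical stand-ins, and a production system would use a language model for Step 1 rather than regular expressions.

```typescript
// Hypothetical sketch of the two-step AI flow; names are illustrative, not Fabrie's.

// Output kinds named in Step 2.
type OutputKind = "image" | "text" | "lowCode" | "code" | "video";

// Step 1 result: a structured reading of the user's request.
interface ParsedRequest {
  kind: OutputKind;
  prompt: string;
}

// Step 1 stand-in: naive keyword matching instead of a language model.
// (This toy version never returns "lowCode".)
function understand(input: string): ParsedRequest {
  const text = input.toLowerCase();
  if (/\b(image|picture|illustration)\b/.test(text)) return { kind: "image", prompt: input };
  if (/\bvideo\b/.test(text)) return { kind: "video", prompt: input };
  if (/\b(code|script|function)\b/.test(text)) return { kind: "code", prompt: input };
  return { kind: "text", prompt: input };
}

// Step 2 stand-in: a real system would call a different generator per kind;
// here we only return a placeholder string describing what would be produced.
function generate(req: ParsedRequest): string {
  return `[${req.kind}] generated from: "${req.prompt}"`;
}

console.log(generate(understand("Draw an illustration of a cozy cabin")));
// prints: [image] generated from: "Draw an illustration of a cozy cabin"
```

Separating the two steps means the understanding layer can be swapped or improved without touching the generators, and vice versa.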

Two Modes of Content Generation

Within Step 2 (Content Generation), we identified two distinct modes:

Create from scratch: generating entirely new objects (e.g., sticky notes, mind maps)

Enhance existing: modifying current objects (e.g., change color, adjust style)

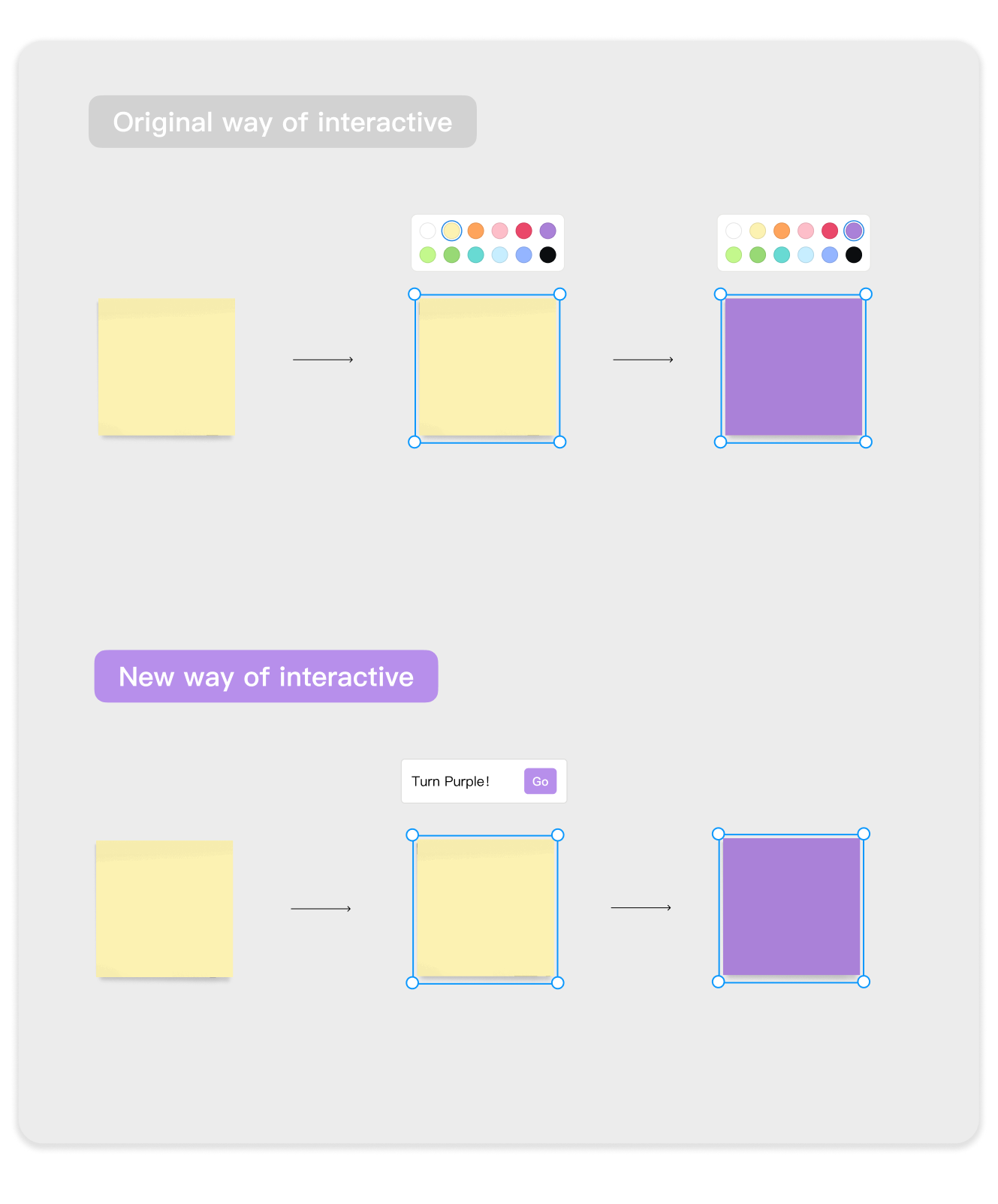
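One way to model the two modes is as a discriminated union, sketched below in TypeScript. The names `BoardObject`, `Generation`, and `apply` are illustrative assumptions, not Fabrie's data model. "Create" appends a new object, while "enhance" merges changes into an object that already exists on the board.

```typescript
// Hypothetical board model; field names are assumptions for illustration.
interface BoardObject {
  id: string;
  type: "sticky" | "mindmap";
  text: string;
  color: string;
}

// The two generation modes as a discriminated union.
type Generation =
  | { mode: "create"; object: BoardObject } // create from scratch
  | { mode: "enhance"; targetId: string; changes: Partial<Omit<BoardObject, "id">> }; // enhance existing

// Returns a new board array; the input array is not mutated.
function apply(board: BoardObject[], gen: Generation): BoardObject[] {
  switch (gen.mode) {
    case "create":
      // Append a brand-new object.
      return [...board, gen.object];
    case "enhance":
      // Merge changes into the targeted object; all others are untouched.
      return board.map((o) => (o.id === gen.targetId ? { ...o, ...gen.changes } : o));
  }
}

let board: BoardObject[] = [];
board = apply(board, { mode: "create", object: { id: "n1", type: "sticky", text: "Idea", color: "yellow" } });
board = apply(board, { mode: "enhance", targetId: "n1", changes: { color: "blue" } });
console.log(board);
```

Making the mode explicit in the type means an "enhance" request always names a target object, while a "create" request always carries a complete new object.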

Interaction: Traditional vs. AI

Traditional commands rely on fixed buttons, each mapped to exactly one task (e.g., pressing “Back” returns to the previous page)

AI commands rely on natural language understanding, breaking the one-to-one mapping between command and task → greater flexibility, but also higher uncertainty
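The contrast can be sketched in TypeScript. The button table is a fixed one-to-one lookup, while `interpret` returns a ranked list of possible commands for free text. The command names and hint words are hypothetical, and keyword overlap is a crude stand-in for real language understanding; the point is only that one input can yield several candidates.

```typescript
// Traditional: a fixed table where each button maps to exactly one command.
const buttonCommands: Record<string, string> = {
  back: "navigateBack",
  removeBackground: "removeBackground",
};

// AI: free text can match several commands, each with a confidence score.
interface Candidate {
  command: string;
  score: number; // fraction of the command's hint words found in the input
}

// Hypothetical hint words; keyword overlap stands in for real language understanding.
const commandHints: Record<string, string[]> = {
  navigateBack: ["back", "previous", "return"],
  removeBackground: ["background", "remove", "cutout"],
  translate: ["translate", "english", "chinese"],
};

function interpret(input: string): Candidate[] {
  const words = input.toLowerCase().split(/\W+/).filter((w) => w.length > 0);
  return Object.entries(commandHints)
    .map(([command, hints]) => ({
      command,
      score: hints.filter((h) => words.includes(h)).length / hints.length,
    }))
    .filter((c) => c.score > 0) // drop commands with no matching words
    .sort((a, b) => b.score - a.score); // most likely command first
}

console.log(buttonCommands["back"]); // prints: navigateBack
console.log(interpret("remove the background and go back"));
```

For "remove the background and go back", `interpret` returns two candidates, with `removeBackground` ranked above `navigateBack`. A button never produces this kind of ambiguity, which is the uncertainty the design has to manage.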

03

Ideation

Challenge

In v2.0, the challenge became concrete: How might we introduce natural language input as a new interaction model, while making AI capabilities feel native to whiteboard objects and spatial workflows?

We approached this challenge from a function-first perspective.

Feature scope (by category):

Image Generation: generate images based on prompts

Text Processing: summarize, continue writing, extract tasks, expand details, split paragraphs, translate, refine text

Image Editing: background removal, OCR text recognition, upscaling, AI-assisted edits

Template Generation (Canvas GPT): mind maps, brainstorming, pros & cons, business model canvas, stakeholder maps, timelines, data tables

From these functions, I categorized interaction needs into two types:

Low-customization needs (e.g., translation, background removal) → retain button-triggered actions

High-customization needs (e.g., generating complex illustrations, custom templates) → introduce natural language input
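The routing rule above can be expressed as a small TypeScript sketch. The `highCustomization` flag is an assumption introduced for illustration, since the case study describes the split qualitatively. The example features are taken from the categories listed earlier.

```typescript
type Trigger = "button" | "naturalLanguage";

interface Feature {
  name: string;
  category: "Image Generation" | "Text Processing" | "Image Editing" | "Template Generation";
  // Assumed flag: true when the result depends on detailed, user-specific intent.
  highCustomization: boolean;
}

const features: Feature[] = [
  { name: "translation", category: "Text Processing", highCustomization: false },
  { name: "background removal", category: "Image Editing", highCustomization: false },
  { name: "complex illustration", category: "Image Generation", highCustomization: true },
  { name: "custom template", category: "Template Generation", highCustomization: true },
];

// Low-customization needs keep a one-click button; high-customization needs get natural language input.
function triggerFor(f: Feature): Trigger {
  return f.highCustomization ? "naturalLanguage" : "button";
}

// Group feature names by the interaction that should trigger them.
function groupByTrigger(list: Feature[]): Record<Trigger, string[]> {
  const groups: Record<Trigger, string[]> = { button: [], naturalLanguage: [] };
  for (const f of list) groups[triggerFor(f)].push(f.name);
  return groups;
}

console.log(groupByTrigger(features));
```

Keeping the rule in one function makes it easy to move a feature between the two interaction types as user needs become clearer.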